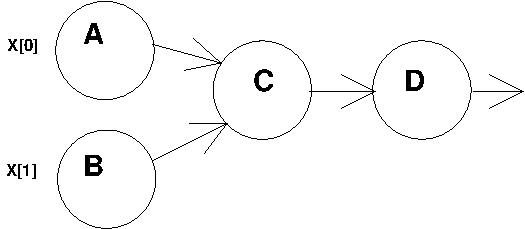

Should be 1 was 0.46285498

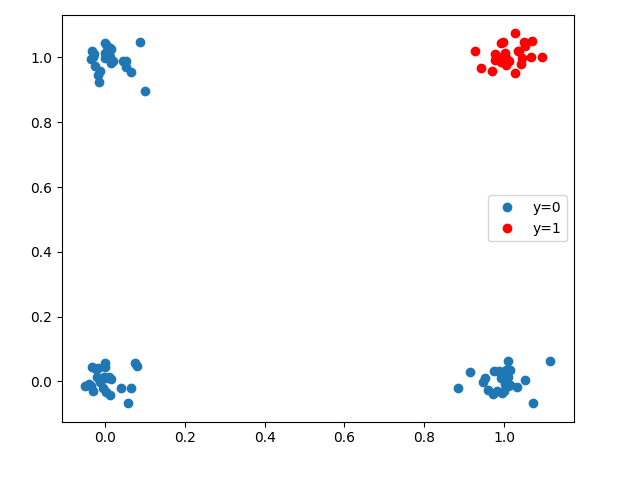

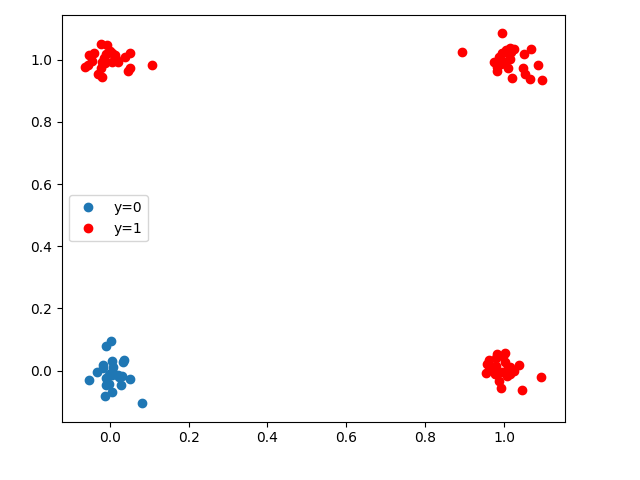

XOR with 1 neuron should FAIL: FAIL

OrderedDict([('linear1.weight', tensor([[-8.6583, -9.2757]])), ('linear1.bias', tensor([1.5032])), ('linear2.weight', tensor([[-8.0780]])), ('linear2.bias', tensor([-0.1426]))])
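The failing value can be replayed by hand from the weights printed above. The sketch below assumes a sigmoid after each linear layer; the output does not show the activation, but that choice lands close to the reported 0.46285498 for input (1, 0). The names `sigmoid` and `forward` are illustrative and not taken from the original code.

```python
import math

def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

# Weights copied from the printed state dict (one hidden neuron).
W1 = [-8.6583, -9.2757]   # linear1.weight
B1 = 1.5032               # linear1.bias
W2 = -8.0780              # linear2.weight
B2 = -0.1426              # linear2.bias

def forward(x1, x2):
    # Assumption: sigmoid follows both linear layers.
    hidden = sigmoid(W1[0] * x1 + W1[1] * x2 + B1)
    return sigmoid(W2 * hidden + B2)

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), round(forward(x1, x2), 4))
```

Under this assumption the outputs for (0, 1) and (1, 0) both stay below 0.5, which is consistent with the expected FAIL: a single hidden neuron cannot separate the XOR cases.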

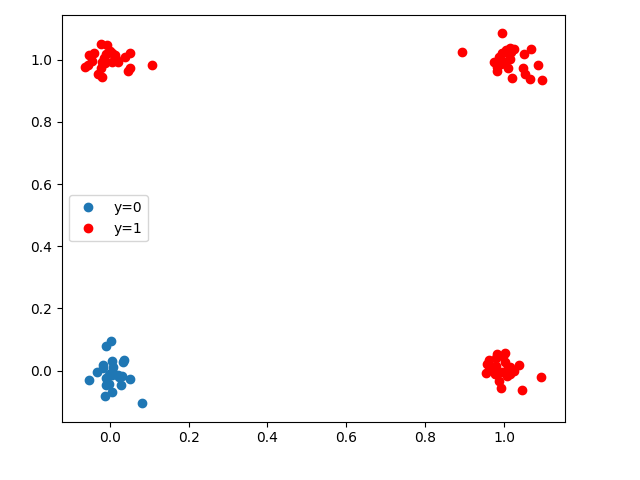

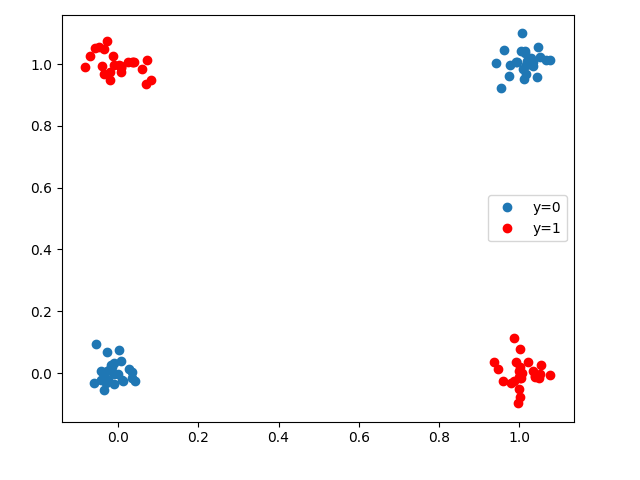

XOR with 2 neurons should PASS: PASS

OrderedDict([('linear1.weight', tensor([[-7.3292, -7.5733], [-5.9858, -6.1268]])), ('linear1.bias', tensor([3.2565, 9.0655])), ('linear2.weight', tensor([[-13.7802, 13.4591]])), ('linear2.bias', tensor([-6.4077]))])
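As a sanity check, the two-neuron weights above can be run through a plain-Python forward pass. This is a sketch under two assumptions not shown in the output: a sigmoid after each linear layer, and a 0.5 threshold for deciding the class. The helper names `sigmoid`, `forward`, and `predict` are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

# Weights copied from the printed state dict (two hidden neurons).
W1 = [[-7.3292, -7.5733],
      [-5.9858, -6.1268]]      # linear1.weight
B1 = [3.2565, 9.0655]          # linear1.bias
W2 = [-13.7802, 13.4591]       # linear2.weight
B2 = -6.4077                   # linear2.bias

def forward(x):
    # Assumption: sigmoid follows both linear layers.
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, B1)]
    return sigmoid(sum(w * h for w, h in zip(W2, hidden)) + B2)

def predict(x):
    # Assumption: outputs above 0.5 count as class 1.
    return int(forward(x) > 0.5)

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, predict(x))
```

With these weights the first hidden unit behaves roughly like NOR and the second roughly like NAND, and the output layer combines them as "NAND and not NOR", which is XOR.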

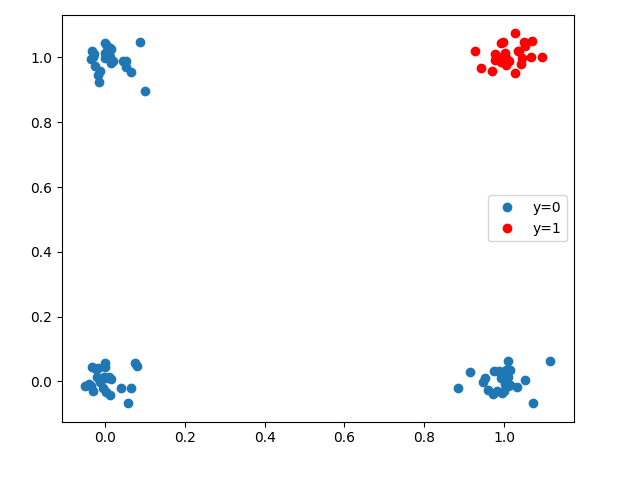

AND should PASS: PASS

OrderedDict([('linear1.weight', tensor([[-5.6573, -5.7037]])), ('linear1.bias', tensor([8.0903])), ('linear2.weight', tensor([[-14.9732]])), ('linear2.bias', tensor([6.5046]))])

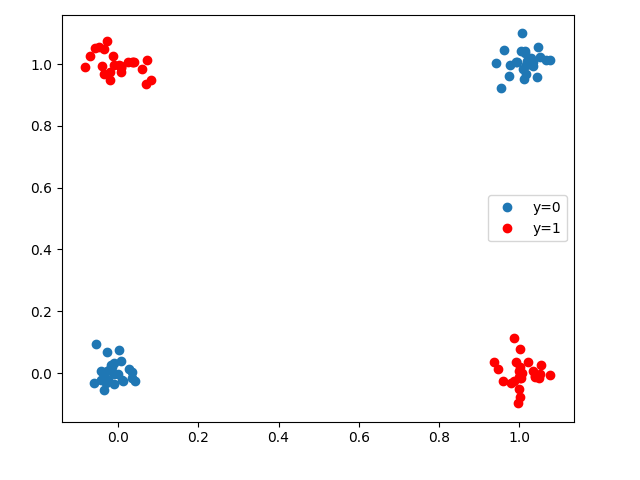

OR should PASS: PASS

OrderedDict([('linear1.weight', tensor([[6.6210, 6.6750]])), ('linear1.bias', tensor([-3.4170])), ('linear2.weight', tensor([[14.3268]])), ('linear2.bias', tensor([-6.4032]))])
